Measuring methane emissions, part 1: Units

Introduction

Methane emissions are becoming an increasingly hot topic, and for good reason: methane is the primary component of natural gas, which accounts for 32% of US energy consumption. And as renewables make up a growing fraction of the US energy landscape, natural gas peaker plants are becoming more important for load balancing. Throughout the natural gas supply chain, from production through compression, transmission, storage, distribution, and point of use, natural gas can and does leak into the environment, with potentially significant implications for cost, safety, and regulatory compliance. Moreover, methane is estimated to be responsible for 16% of anthropogenic climate change[1]. Finding and mitigating methane leaks is critical if the US energy infrastructure is to remain competitive and improve its carbon footprint.

It’s impossible to fix what you can’t measure, so measuring methane emissions has become a top priority for policymakers. But this fairly straightforward observation hides a veritable thicket, in the form of every engineer’s best friend: units. Units are the yardstick by which we measure things: it does not make sense, for instance, to say that a person’s height is “2”. 2 what? Feet? Meters? Attoparsecs? Units are the scale we use to express the measurement, and in the case of methane measurement, there are a dizzying number of units in common use. Understanding the relationships between these units is not just a matter of using a spreadsheet to convert between them. Some units of measurement are more appropriate to certain types of measurements than others, and some are not even directly comparable. For instance, one popular old-school approach for measuring natural gas emissions is bagging: one places a bag of known volume over the leaking component and times how long it takes to fill up. This leads naturally to units of standard cubic feet per hour (SCFH). On the other hand, gas analyzers or sniffers, such as the SensIt, “inhale” a known volume of air and determine the amount of methane mixed in with it, leading to the use of parts per million (ppm).

In this blog post, we’ll walk through the physics of the various units used to measure methane emissions. Then, in our next post, we’ll discuss how these units relate to each other. By the end, you’ll know not only which units can be converted to which, and how, but also when to use which measurement.

Leaks and Flow Rates

One of the most common ways of measuring methane emissions is as a flow rate. If a valve is leaking, it is letting out a certain amount of gas every second, or hour. If we measure the volume or mass of the gas that comes out over a defined time period, as with the bagging example above, then we are measuring a flow rate: an amount of gas per unit of time.
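As a minimal sketch, the arithmetic behind a bagging measurement is just the collected amount divided by the elapsed time, scaled to an hour. The function name `flow_rate_per_hour` and the bag size and fill time below are hypothetical, chosen for illustration. Note that the bag volume is at whatever temperature and pressure the bag happened to be at, not yet at standard conditions.

```python
SECONDS_PER_HOUR = 3600.0


def flow_rate_per_hour(amount: float, elapsed_seconds: float) -> float:
    """Average flow rate, in units of `amount` per hour.

    `amount` may be a volume (e.g. cubic feet collected in a bag) or a mass
    (e.g. kilograms); the result carries the same unit, per hour.
    """
    if elapsed_seconds <= 0:
        raise ValueError("elapsed time must be positive")
    return amount * SECONDS_PER_HOUR / elapsed_seconds


# Hypothetical bagging measurement: a 3 ft^3 bag fills in 90 seconds.
print(flow_rate_per_hour(3.0, 90.0))  # 120.0 ft^3/hr, at the bag's own temperature and pressure
```

The same function works for the mass-based case: two weighings an hour apart give a mass difference, and dividing by the elapsed time gives kg/hr.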

A common unit for the flow rate of natural gas is kilograms per hour (kg/hr). This is an intuitively simple measure: imagine a bucket filling with water at a constant rate. If we weigh the bucket, and then weigh it again an hour later, the difference between the two weights is the flow rate in kg/hr. This unit is common in the academic literature on methane emissions.

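Unfortunately, in the case of gases, it’s not quite that straightforward to measure the weight, especially for gases lighter than air, like methane. For this reason, it’s common to measure leak rates in units of volume per time, such as standard cubic feet per hour. Here, “standard” refers to “standard temperature and pressure” (STP), because the volume of a gas depends on both, via the Ideal Gas Law:

$$PV = nRT$$

where $P$ is the absolute pressure, $V$ the volume, $n$ the amount of gas in moles, $R$ the ideal gas constant, and $T$ the absolute temperature. For a fixed amount of gas, the volume grows with temperature and shrinks with pressure, so a volume only tells you how much gas there is once the temperature and pressure are pinned down.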

STP is defined[2] as 60° F and 1 atmosphere (14.7 psia) of pressure. When a gas volume is referenced to standard temperature and pressure, the flow rate is expressed as Standard Cubic Feet per Hour (SCFH). Leak rates are also commonly expressed as Standard Cubic Feet per Day (SCFD) and Thousand Standard Cubic Feet per Day (MSCFD). This latter unit is the one we at Insight M use to express leak rates, as it is a well-known unit of natural gas flow rate in the oil and gas industry. Flow rates are relevant to oil and gas operations because they express the “size” of a leak intuitively. Leak rates expressed in this way can be used to directly evaluate important environmental and operational questions, such as “How much money are we losing per day to leaks?” or “What is the impact of our emissions on the climate?”
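The Ideal Gas Law gives a simple way to correct a volume measured at actual conditions to standard conditions: for a fixed amount of gas, V_std = V × (P / P_std) × (T_std / T), using absolute temperature and pressure. The sketch below assumes ideal-gas behavior (real natural gas deviates slightly) and the 60° F, 14.696 psia (about 14.7 psia) convention; the function names and the example reading are hypothetical.

```python
STD_TEMP_F = 60.0           # standard temperature for natural gas
STD_PRESSURE_PSIA = 14.696  # 1 atmosphere, often rounded to 14.7 psia


def fahrenheit_to_rankine(temp_f: float) -> float:
    """Convert degrees Fahrenheit to the absolute Rankine scale."""
    return temp_f + 459.67


def to_standard_volume(volume: float, temp_f: float, pressure_psia: float) -> float:
    """Correct a gas volume measured at (temp_f, pressure_psia) to standard conditions.

    From PV = nRT with n fixed: V_std = V * (P / P_std) * (T_std / T).
    pressure_psia must be absolute pressure, not gauge pressure.
    """
    return (
        volume
        * (pressure_psia / STD_PRESSURE_PSIA)
        * (fahrenheit_to_rankine(STD_TEMP_F) / fahrenheit_to_rankine(temp_f))
    )


def scfh_to_scfd(scfh: float) -> float:
    """Standard cubic feet per hour to standard cubic feet per day."""
    return scfh * 24.0


def scfd_to_mscfd(scfd: float) -> float:
    """Standard cubic feet per day to thousand standard cubic feet per day."""
    return scfd / 1000.0  # the "M" is the Roman numeral for one thousand


# Hypothetical reading: 120 ft^3/hr collected on a hot (95 F) day at 14.7 psia.
scfh = to_standard_volume(120.0, temp_f=95.0, pressure_psia=14.7)
scfd = scfh_to_scfd(scfh)
print(f"{scfh:.1f} SCFH = {scfd:.0f} SCFD = {scfd_to_mscfd(scfd):.3f} MSCFD")
```

Warm gas is expanded, so the standard volume comes out a few percent smaller than the raw bag volume in this example.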

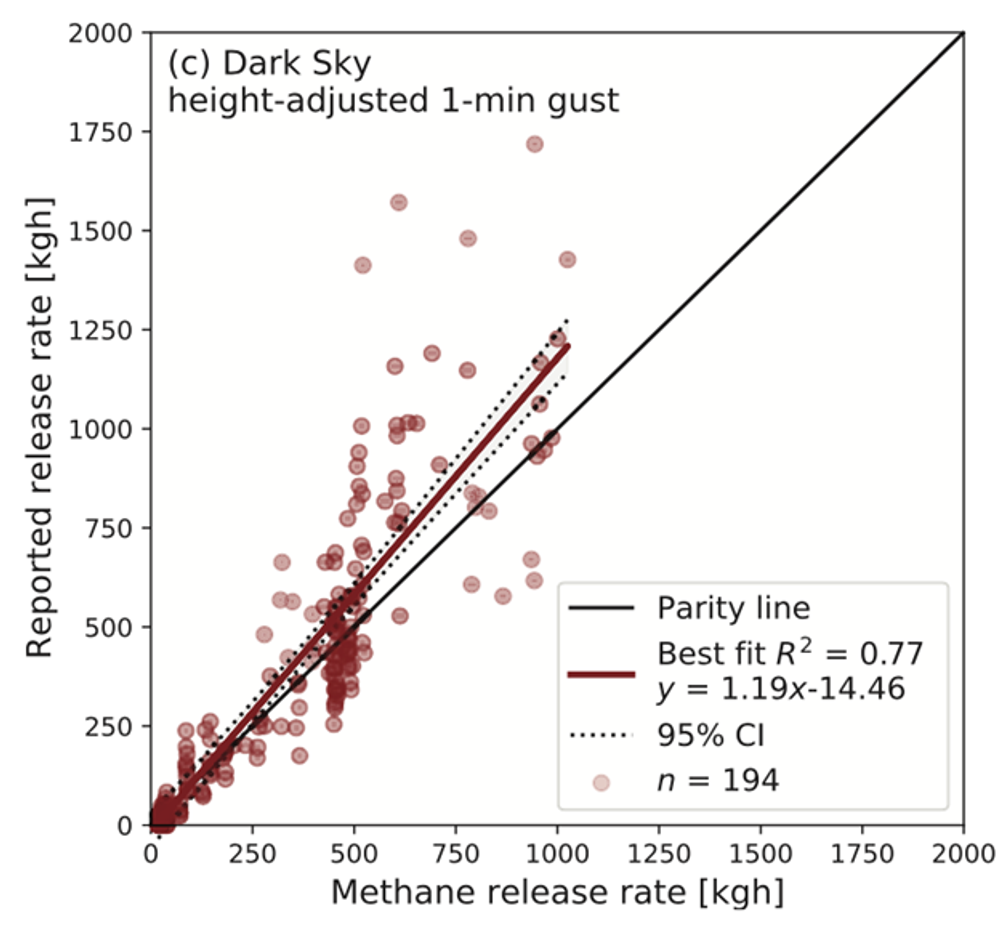

Common simple methods of measuring flow rates are bagging, for leaks, and flow metering devices, for controlled flows. Flow meters can measure flow rates either by mass or by volume. Of course, it is also possible to measure gas flow rates remotely using, for instance, hyperspectral imaging, as our LeakSurveyor instrument does. Stanford recently published an independent verification of our leak rate quantification.

Concentration

Another common way of quantifying methane is by measuring its concentration. In general, concentration is a measurement of the amount of some molecule (the solute) in some other medium (the solvent). For instance, if we were to mix a drop of ink into a glass of water, the concentration of ink might be expressed as the ratio of the mass of ink particles in the drop (the solute) to the mass of the water (the solvent). In cases where the amount of solute is very small, such as a dilute gas in air, it is often convenient to express this ratio in parts per million (ppm). A methane concentration of 1 ppm means that there is one methane molecule for every million air molecules.
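A minimal sketch of the definition, using a hypothetical `ppm` helper. One caveat worth making explicit: the ink example is a ratio of masses, but gas-phase ppm is normally a ratio of molecule counts (a mole fraction), as in the methane example.

```python
def ppm(solute_amount: float, mixture_amount: float) -> float:
    """Concentration in parts per million: solute per million parts of mixture.

    Both arguments must use the same unit. For gases this is normally a count
    of molecules or moles (a mole fraction); for an ideal gas mixture that is
    the same as the volume fraction, which is why gas ppm is often written ppmv.
    """
    if mixture_amount <= 0:
        raise ValueError("mixture amount must be positive")
    return solute_amount * 1_000_000 / mixture_amount


print(ppm(1, 1_000_000))  # 1.0: one methane molecule per million molecules of air
print(ppm(5, 2_000_000))  # 2.5
```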

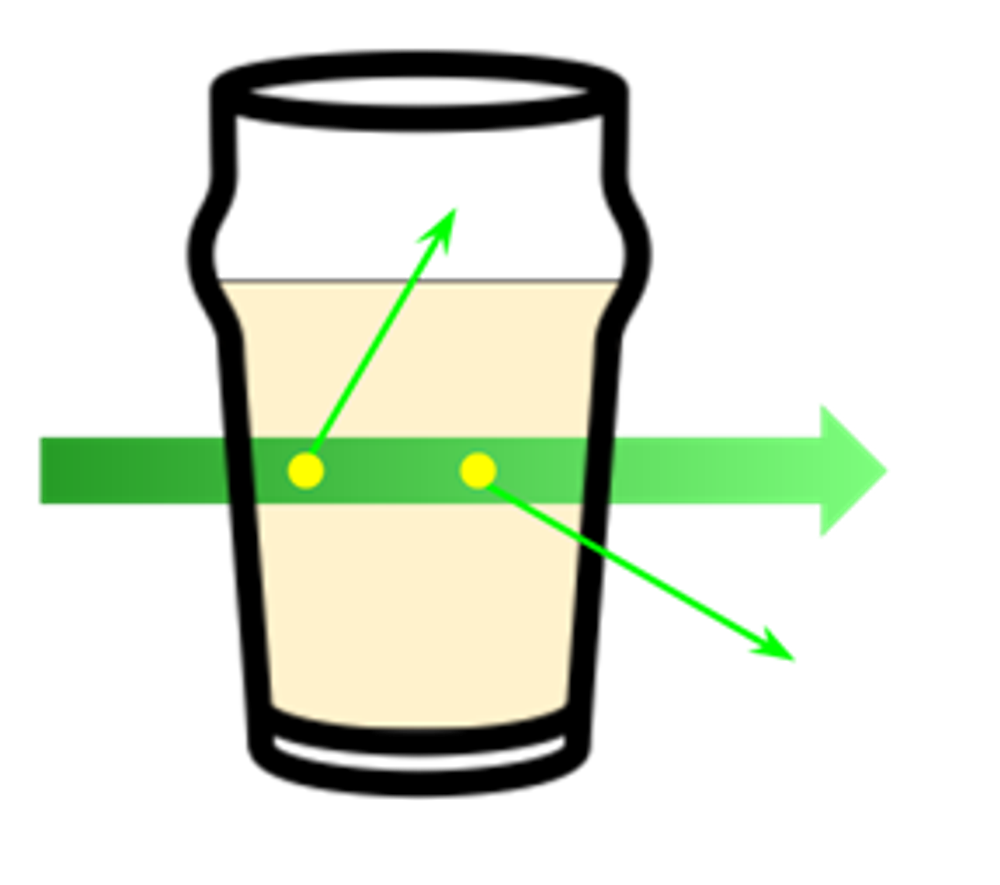

Gas concentration can be measured by a variety of techniques, including small gas-detecting chips, sniffers that use gas spectroscopy to measure concentration in a known volume, and laser sensing systems that measure the absorption of a probe beam by a cloud of gas. In the case of “open path” laser systems, the measured quantity is actually proportional to both the concentration and the distance the laser travels through the gas before being detected. Imagine a beam of light passing through a glass of liquid.

The further the beam propagates through the liquid, the more chances it has to encounter solute molecules, which can absorb or scatter photons out of the beam path, leading to lower observed light intensity. This principle is known as Beer’s Law, which is sometimes colloquially expressed as “The bigger the glass, the darker the brew, the harder it is for light to get through.”[3] As a result, open-path systems will often report concentration in parts per million-meters (ppm‑m), a unit that takes into account the effect of path length on the measurement. Dividing by the path length in meters gives us back units of ppm: the average concentration along the beam path.
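The sketch below shows why a ppm‑m reading only yields a path-averaged ppm. The absorption coefficient is a hypothetical placeholder rather than a real methane value, the two scenes are invented for illustration, and background methane is ignored.

```python
import math


def path_averaged_ppm(ppm_m: float, path_length_m: float) -> float:
    """Turn a path-integrated reading (ppm-m) into the average concentration (ppm) along the beam."""
    if path_length_m <= 0:
        raise ValueError("path length must be positive")
    return ppm_m / path_length_m


def transmitted_fraction(ppm_m: float, absorption_coeff: float) -> float:
    """Beer's law: fraction of the beam that survives, I/I0 = exp(-k * C * L).

    ppm_m is the concentration-path-length product C * L. absorption_coeff
    (k, per ppm-m) is a hypothetical placeholder; a real value depends on the
    laser wavelength, temperature and pressure.
    """
    return math.exp(-absorption_coeff * ppm_m)


# Two hypothetical scenes that give the same reading over a 50 m beam:
# a 2 m wide plume at 50 ppm, and a diffuse 2 ppm cloud filling the whole path.
thin_plume_ppm_m = 50.0 * 2.0
diffuse_ppm_m = 2.0 * 50.0
print(thin_plume_ppm_m == diffuse_ppm_m)          # True: both are 100 ppm-m
print(path_averaged_ppm(thin_plume_ppm_m, 50.0))  # 2.0 for both scenes
```

The instrument cannot tell a small, concentrated plume from a large, dilute one; both dim the beam by the same amount.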

Environmental health and safety (EHS) regulations are often expressed in terms of ppm limits. This is because the combustibility and toxicity of a gas are most easily understood in terms of local concentration, and these are the primary concerns from an EHS standpoint.
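To make the combustibility point concrete, combustible gas detectors commonly report concentration as a percentage of the lower explosive limit (LEL), the lowest concentration in air that can ignite. The sketch assumes a methane LEL of 5% by volume, a commonly cited figure (some standards use 4.4%), so treat the constant as an assumption rather than a regulatory value; `percent_of_lel` is a hypothetical helper.

```python
# Assumed lower explosive limit (LEL) of methane in air, in percent by volume.
# 5% is the commonly cited figure; some standards use 4.4%.
METHANE_LEL_PERCENT_VOL = 5.0


def percent_of_lel(concentration_ppm: float, lel_percent_vol: float = METHANE_LEL_PERCENT_VOL) -> float:
    """Express a local concentration (ppm by volume) as a percentage of the LEL."""
    lel_ppm = lel_percent_vol * 10_000  # 1% by volume = 10,000 ppm
    return concentration_ppm * 100.0 / lel_ppm


print(percent_of_lel(5_000))       # 10.0: 5,000 ppm is 10% of a 5% LEL
print(percent_of_lel(5_000, 4.4))  # a bit higher under the 4.4% convention
```

Note that this calculation needs only the local concentration; no flow rate enters into it.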

Converting and Comparing Units

Converting among all these units can be a huge headache, and often stymies comparisons between different methodologies and studies. Fortunately, Highwood Emissions Management has put up a page that converts among various leak rate measurements and does the hard work for you. The app only works for flow rate measures; it doesn’t allow conversion to concentration units, such as ppm or ppm‑m. By this point in our tour of units, you know why: these units aren’t actually comparable, because they measure different things. To recap: flow rate measurements, such as MSCFD, tell you how much gas is coming out per unit time, and are the right way to answer the question “How big is this leak?” Concentration measurements, like ppm, answer “How much of this gas is here?” and are appropriate for questions such as “How dangerous is this leak?”
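For flow rates, the core of such a conversion is the Ideal Gas Law. As a sketch, assuming the leaking gas is pure methane (real natural gas also contains ethane, CO2, nitrogen and so on, which changes the numbers) and ideal-gas behavior at 60° F and 1 atm, converting a mass rate to a standard volume rate means dividing by methane’s molar mass and multiplying by the molar volume at standard conditions. This is not necessarily how the Highwood tool computes its results, and the function names are hypothetical.

```python
R_J_PER_MOL_K = 8.314462618                    # ideal gas constant
T_STD_K = (60.0 - 32.0) * 5.0 / 9.0 + 273.15   # 60 F expressed in kelvin
P_STD_PA = 101_325.0                           # 1 atm (about 14.7 psia) in pascals
M_CH4_KG_PER_MOL = 0.016043                    # molar mass of methane
M3_PER_FT3 = 0.028316846592                    # cubic meters in one cubic foot

# Ideal-gas volume of one mole at standard conditions, in cubic feet
# (roughly 0.837 ft^3/mol, i.e. about 379.5 ft^3 per pound-mole).
MOLAR_VOLUME_FT3_PER_MOL = R_J_PER_MOL_K * T_STD_K / P_STD_PA / M3_PER_FT3


def kg_per_hr_to_scfh(kg_per_hr: float) -> float:
    """Mass flow of methane (kg/hr) to standard volume flow (SCFH)."""
    moles_per_hr = kg_per_hr / M_CH4_KG_PER_MOL
    return moles_per_hr * MOLAR_VOLUME_FT3_PER_MOL


def scfh_to_kg_per_hr(scfh: float) -> float:
    """Standard volume flow (SCFH) back to mass flow of methane (kg/hr)."""
    return scfh / MOLAR_VOLUME_FT3_PER_MOL * M_CH4_KG_PER_MOL


def kg_per_hr_to_mscfd(kg_per_hr: float) -> float:
    """Mass flow of methane (kg/hr) to thousand standard cubic feet per day."""
    return kg_per_hr_to_scfh(kg_per_hr) * 24.0 / 1000.0


for rate in (1.0, 10.0):
    print(f"{rate:g} kg/hr = {kg_per_hr_to_scfh(rate):.1f} SCFH = {kg_per_hr_to_mscfd(rate):.2f} MSCFD")
```

Under these assumptions, 1 kg/hr of methane works out to roughly 52 SCFH, or about 1.25 MSCFD. There is no analogous function for ppm, because a concentration carries no time dimension.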

In our next post on this topic, we’ll explore in greater depth how leak rate and concentration measures differ, why they’re so incompatible, and the problems associated with using concentration measures for quantifying leak rates.

Footnotes

[1] See https://gml.noaa.gov/aggi/aggi.html for details. I computed this directly from the latest values given for radiative forcing of various GHGs, but it’s a very complex topic, and one may find numbers for this value ranging from 10% to 40% in the literature.

[2] Actually, there are several conventions for what the “standard” temperature is, including 0° C, 70° F, and 25° C. The wonderful thing about standards is that there are so many to choose from. In the context of natural gas, 60° F is almost always the standard temperature.

[3] Sadly, this is what passes for humor among physicists.